Welcome to ANNE - the Artificial Neural Net Engine - WIP thread. The goal of ANNE is to provide the foundational functions for a learning and evolving neural net engine. The functions can then be used like any code in any project.

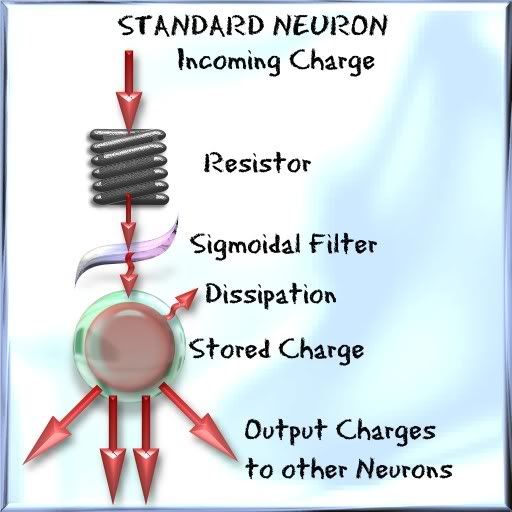

First, let me cover how an Artificial Neuron works. There are some basic components that make up each Neuron. The neuron learns and evolves by changing these components. Of course, we want to make these changes in a logical manner so as to speed up the process. But that is getting ahead of ourselves. Take a look at the following diagram.

This is a Standard Neuron with the following components:

>Resistor

>Sigmoidal Curve

>"Capacitor" (holds the charge)

>>Threshold (point at which the charge is released)

>Dissipation

>Output Charge

The resistor reduces each incoming charge by a given factor. This way, a neuron can either allow in the full impact of a charge (division by 1) or reduce the charge. This is useful if there are multiple neurons providing input to a single neuron.

Let me take a step back here; when talking about a "charge", as far as an artificial neuron is concerned, this is simply a numeric value that is being manipulated, stored, or moved.

The sigmoidal curve helps to separate and standardize an incoming charge. It maps any incoming value to a number between 0 and 1, with an input of 0 landing on the 0.5 midpoint. The further the input is from 0, the harder the result is pushed toward 0 or 1, so most charges are converted to near 0 or near 1 and tend to stay away from the .5 mid range. So each incoming charge will likely add a lot or add very little charge to the neuron.
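To make the input path concrete, here is a minimal sketch in Python (not DBPro, just so it can be run anywhere). It applies the resistor as a divisor and then the sigmoidal curve, using the same formula as the Sigmoidal() function posted further down. The name `incoming_charge` is made up for this illustration.

```python
def sigmoidal(value, curve):
    """Squash a charge into the range 0..1.

    Same formula as the posted Sigmoidal() function:
    1 / (1 + 2.04541 ^ -(value / (curve * 0.1)))
    Note: very large negative inputs can overflow a float in Python.
    """
    return 1.0 / (1.0 + 2.04541 ** -(value / (curve * 0.1)))


def incoming_charge(charge, resistance, curve):
    """Hypothetical helper: resistor first (a divisor), then the curve."""
    return sigmoidal(charge / resistance, curve)


# Inputs away from zero land close to 0 or 1; zero lands on the midpoint.
for raw in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(raw, round(incoming_charge(raw, resistance=1.0, curve=1.0), 4))
```

A higher resistance pulls the input toward zero before the curve is applied, so the same raw charge ends up adding less to the capacitor.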

The Capacitor stores the charge. This just simply stores the number for future use. The charge continues to build up until a set threshold is reached. Once a neuron releases a charge, the capacitor is emptied to start building up a new charge.

Dissipation is the rate at which the charge in the capacitor is reduced. This helps prevent neurons from always firing at some point or another.

Output charge is the value that the neuron puts out when it fires. When the charge in the neuron's capacitor reaches the threshold value, the output charge is sent to four other neurons, and the process starts over with those neurons.
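The store, dissipate, and fire cycle described above could be sketched like this in Python. It is only an illustration under a couple of assumptions: the class and method names are invented here, the neuron fires when the charge is greater than the threshold (as the posted Fire_Neuron does), and the charge is clamped at zero so dissipation never drives it negative, which the posted code does not do.

```python
from dataclasses import dataclass


@dataclass
class Neuron:
    threshold: float      # charge level at which the neuron fires
    dissipation: float    # charge lost every cycle
    output: float         # value sent to linked neurons on firing
    charge: float = 0.0   # the "capacitor"

    def add_charge(self, amount):
        """Store an incoming (already processed) charge."""
        self.charge += amount

    def cycle(self):
        """Run one cycle. Returns the output charge if fired, else None."""
        if self.charge > self.threshold:
            self.charge = 0.0  # capacitor is emptied after firing
            return self.output
        # Assumption: clamp at zero instead of letting the charge go negative.
        self.charge = max(0.0, self.charge - self.dissipation)
        return None


n = Neuron(threshold=1.0, dissipation=0.1, output=2.0)
n.add_charge(0.6)
print(n.cycle())   # not enough charge yet, some of it leaks away
n.add_charge(0.6)
print(n.cycle())   # charge is now above the threshold, so it fires
```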

By manipulating the above neuron components, each neuron can provide a vast amount of data via its single output charge.

So far, we have input and output of charge (or numerical values). But what good does that do us? Not much unless there is an action at the end of a neural "path". This is where a Terminal Neuron comes in. Terminal Neurons do not pass data on to other Neurons. Instead, Terminal Neurons activate functions. Take a look at the following picture:

As you can see, the Neuron is almost identical to a standard neuron. However, in place of the Output Charges, the Terminal Neuron activates a function. This can be anything we can write a function for. For example:

Function Turn_Me_Left(Me as DWord)

Turn Object Left Me,.1

Endfunction

Function Turn_Me_Right(Me as DWord)

Turn Object Right Me,.1

Endfunction

Function Move_Me_Forward(Me as DWord)

Move Object Me,.1

Endfunction
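One way to picture how a Terminal Neuron could reach functions like the three above is a lookup from a function ID to the function itself. The Python sketch below is only an illustration: the table, the ID numbers, and `fire_terminal` are invented here, and the stand-in functions return a string instead of moving a real object.

```python
def turn_me_left(me):
    return f"object {me}: turn left 0.1"


def turn_me_right(me):
    return f"object {me}: turn right 0.1"


def move_me_forward(me):
    return f"object {me}: move 0.1"


# Hypothetical function IDs; the real engine may number these differently.
FUNCTIONS = {0: turn_me_left, 1: turn_me_right, 2: move_me_forward}


def fire_terminal(fid, me):
    """Call the function registered under fid, or do nothing if unknown."""
    action = FUNCTIONS.get(fid)
    if action is None:
        return None
    return action(me)


print(fire_terminal(0, 7))
```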

Pretty cool! Now we can code a Neural Network to control an object to move around. But, you are probably asking, how does the Neural Net learn or evolve?

Two things have to happen for the Neural Net to learn/evolve. Change has to take place. This means the components of each neuron have to be altered. But random altering would take forever to run through all the possible permutations. We really don't want to wait a few billion years for this to happen. So we need the second part as well:

Scoring. Basically, this is how the neurons know if they are doing well or not. Think of it as positive and negative reinforcement. As a component does well, it solidifies the value and resists change. As a component does poorly, it demands change to a value that performs better.

In ANNE, scoring takes place on two levels. The first is on an ongoing basis for the high-level neuron structure. This means that there are 10000 neuron structures available to choose from. Each of the 10000 structures is scored on a continuous basis. As neuron performance demands change, a neuron can pull a new structure from those available. Each structure keeps a score of how well it does. Eventually, only the structures that do well will be selected by neurons needing a new structure. This is the evolutionary process. The strong neuron structures come out ahead and eventually will replace most or all of the weaker neuron structures.
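Here is a rough Python sketch of that structure-level selection, modeled on the Get_DNA_Seed() function posted further down: until some structure has earned a decent score the pick is purely random, and after that a structure is more likely to be accepted the closer its score is to the best one. The function name, the `warmup` parameter, and the small score list are assumptions for the example.

```python
import random


def pick_structure(scores, rng, warmup=100):
    """Return the index of a structure, favoring high scorers."""
    best = max(scores)
    if best < warmup:
        # Nothing has proven itself yet: pick any structure.
        return rng.randrange(len(scores))
    for i, score in enumerate(scores):
        gap = (best + 5) - score
        # Small gap (score near the best) means a high chance of acceptance.
        if rng.randint(0, gap) < 2:
            return i
    # Nothing was accepted during the scan: fall back to a random pick.
    return rng.randrange(len(scores))


rng = random.Random(1)
scores = [0] * 50
scores[10] = 1000          # one structure has done very well
picks = [pick_structure(scores, rng) for _ in range(2000)]
print(picks.count(10), "of", len(picks), "picks went to structure 10")
```

Because the scan always starts at the first structure, structures with equal scores are favored in index order. Shuffling the scan order would be one way to even that out.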

The second level is at the component level. Each component has a default value, which is temporarily stored as an "old value". This old value is scored for a period of time. Then a "new value" is generated, which is simply a nudge in either the + or - direction. The new value is scored for the same period of time. Then the scores are compared. If the new value scored better, then the new value replaces the old value. Otherwise, the old value stays in place.
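The old value versus new value comparison is basically a small hill-climbing step. A minimal Python sketch, assuming a made-up scoring function that peaks at a threshold of 3.0 (in the real engine the score would come from how the net actually performs over the scoring period):

```python
import random


def train_component(value, score_fn, step, rng):
    """Try one nudge; keep it only if it scores better than the old value."""
    old_score = score_fn(value)
    new_value = value + rng.choice((-step, step))   # nudge in + or - direction
    new_score = score_fn(new_value)
    return new_value if new_score > old_score else value


def toy_score(threshold):
    """Hypothetical score: the closer to 3.0, the better."""
    return -abs(threshold - 3.0)


rng = random.Random(0)
value = 5.0
for _ in range(50):
    value = train_component(value, toy_score, 0.25, rng)
print(value)
```

A nudge that scores worse is simply thrown away, so the value never moves in the wrong direction. The cost is that roughly half of the trial periods are spent on nudges that get rejected.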

This process is called "training" and is done by "Instructor Neurons" (or INeurons). There are only a few Instructor Neurons at any given time because of the resources required in the training process. Some INeurons train for short periods of time, some train for medium periods of time, and others train for long periods of time. During the training period, the INeuron will only train a single neuron. Once training is complete, the INeuron moves on to another neuron for training. The process of training an entire network can take some time, so more INeurons are better. But the more INeurons used, the more resources are used as well. So a balance must be found depending on the network size and resources available.
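The bookkeeping for a single INeuron could look something like the Python sketch below. The field names follow the Instructor_Neurons type posted further down (NID, Epoch_Count, Epoch_Limit), but moving to the next neuron in round-robin order is only an assumption made for the example, since the post does not say how the next neuron is chosen.

```python
from dataclasses import dataclass


@dataclass
class Instructor:
    epoch_limit: int        # short, medium, or long training period
    nid: int = 0            # neuron currently being trained
    epoch_count: int = 0    # cycles spent on the current neuron

    def tick(self, neuron_count):
        """Advance one training cycle.

        Returns True when training of the current neuron just finished
        and the instructor moved on to another neuron.
        """
        self.epoch_count += 1
        if self.epoch_count >= self.epoch_limit:
            self.epoch_count = 0
            # Assumption: simply move to the next neuron in order.
            self.nid = (self.nid + 1) % neuron_count
            return True
        return False


short_term = Instructor(epoch_limit=3)
for cycle in range(7):
    moved = short_term.tick(neuron_count=4)
    print(cycle, short_term.nid, moved)
```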

The first phase of the project is to have a single working neural net and demonstrate its capabilities. The second phase will be to expand the functionality so that multiple neural nets can be implemented at the same time. This way several AI entities can take advantage of the neural net in the same program.

Below are some of the base functions. This is enough for the Neurons and simple net, but the INeurons and evolution functions are not implemented yet. It does not do anything yet, but does show the work in progress (and lots of remarks).

`Neurons: Tracks all relevant data to store and fire a "neuron charge" as well as

` Linked Neurons and triggered function data

` Seed: This is the base DNA seed used to "prime" the neuron. Neuron DNA can be modified later by Instructor Neurons

` Charge: This is the "electrical" charge stored in the Neuron

` Curve: The curve modifies incoming electrical charges using a sigmoidal function.

` Threshold: The value at which the Charge will cause the Neuron to fire.

` Resistance: A divisor that reduces an incoming charge by a given amount before applying the sigmoidal curve modification.

` Output: This is the output charge created by the Neuron. It is independent of any input charges and is not divided out by the number of linked Neurons.

` Links: These track which Neurons the Neuron is linked to.

` Dissipation: This reduces the charge each cycle. This prevents Neurons from always firing regardless of the input speed.

` Function Flag: A boolean flag that indicates if the Neuron is a Terminal Neuron that activates a function.

` FID: Function ID used by the DFE to call a given function.

` PID: Parameter ID used by the ANNEngine to store parameters (used for performance).

Type Neurons

Seed as Integer

Charge as Float

Curve as Float

Threshold as Float

Resistance as Float

Output as Float

Link1 as DWord

Link2 as DWord

Link3 as DWord

Link4 as DWord

Dissipation as Float

FunctionFlag as Boolean

FID as DWord

PID as DWord

Endtype

`Scoring: Used by Instructor Neurons (INeurons) to record individual "gene" performance

` OldVal/NewVal: Used to track old values and new values being used in any given test run.

` OldScore/NewScore: Tracks old value scores and new value scores to see if the new gene is better (or not) than the old gene.

Type Scoring

OldVal as Float

NewVal as Float

OldScore as Integer

NewScore as Integer

Endtype

`Instructor_Neurons: These are used to make slight modifications to any given neuron's gene sequence.

` NID: Tracks which Neuron the Instructor is currently "training."

` Epoch Count: How many "training" cycles have passed since the start of the training.

` Epoch Limit: This is how long the "Instructor" will train the Neuron. Instructors can be set for short, medium, or long term training.

` The remaining values store how well the related "genes" (of the same name) perform.

Type Instructor_Neurons

NID as DWord

Epoch_Count as DWord

Epoch_Limit as DWord

Curve as Scoring

Threshold as Scoring

Resistance as Scoring

Output as Scoring

Link1 as Scoring

Link2 as Scoring

Link3 as Scoring

Link4 as Scoring

Dissipation as Scoring

Endtype

`Initializes the ANNEngine by establishing all the arrays.

`Also can be used as a check to see if the ANNEngine is already initialized, and if not, initialize it.

Function Initialize_ANNE()

`Init_ANNE(0): Tracks if the ANNEngine has been initialized

Dim Init_ANNE(0)

If Init_ANNE(0)<>0 Then Exitfunction

Init_ANNE(0)=1

`Neurons(): The heart of the engine. These store and fire "electrical" charges to linked neurons.

Dim Neuron(-1) as Neurons

`INeurons: Instructional Neurons that track how well a particular Neuron's internal DNA sequence is doing.

Dim INeuron(-1) as Instructor_Neurons

`ANNEParameters(): Terminator Neurons can activate functions. This array stores the given parameters.

Dim ANNEParamters(-1) as String

`DNA(0): Temporarily holds a "DNA sequence" for seeding a Neuron.

Dim DNA(0) as Neurons

`DNAScore(): Tracks how well a particular DNA sequence has done historically.

` Poor performing sequences are less likely to be selected for a new or re-coded Neuron

`Named DNAScore() to match the name used in Score_DNA() and Get_DNA_Seed().

Dim DNAScore(10000) as Word

`MaxDNAScore(0): Tracks the "best performing" DNA score - used for comparison and selection.

Dim MaxDNAScore(0) as Word

EndFunction

` Make_Neuron(): Creates a single Neuron. The Neuron will select a "preferred" gene sequence and mirror that code.

` The neuron will also automatically link itself to 4 other neurons.

Function Make_Neuron()

Initialize_ANNE()

Array Insert At Bottom Neuron()

index=Array Count(Neuron())

Randomize Timer()

Seed=Get_DNA_Seed()

Get_DNA(Seed)

Neuron(index).Curve=DNA(0).Curve

Neuron(index).Threshold=DNA(0).Threshold

Neuron(index).Resistance=DNA(0).Resistance

Neuron(index).Output=DNA(0).Output

Neuron(index).Dissipation=DNA(0).Dissipation

If index<1 Then L1=1 Else L1=rnd(index-1)

If index<2 Then L2=2 Else L2=rnd(index-1)

If index<3 Then L3=3 Else L3=rnd(index-1)

If index<4 Then L4=4 Else L4=rnd(index-1)

Neuron(index).Link1=L1

Neuron(index).Link2=L2

Neuron(index).Link3=L3

Neuron(index).Link4=L4

Endfunction index

`Make_Terminal_Neuron(Function$,Parameter$): Creates a terminating neuron. Terminal neurons have all the genes of

` a regular neuron (above), but they do not link to other neurons. Instead, a terminal neuron activates functions.

Function Make_Terminal_Neuron(Function$ as string,Parameter as string)

Initialize_ANNE()

Array Insert At Bottom Neuron()

index=Array Count(Neuron())

Neuron(index).Curve=1

Neuron(index).Threshold=5

Neuron(index).Resistance=1 `Resistance is a divisor in Add_Charge(); 0 would divide by zero, 1 lets the full charge in

Neuron(index).Output=1

Neuron(index).Link1=99999

Neuron(index).Link2=99999

Neuron(index).Link3=99999

Neuron(index).Link4=99999

Neuron(index).Dissipation=.01

Neuron(index).FunctionFlag=1

Neuron(index).FID=_Get_FID(Function$)

Array Insert At Bottom ANNEParamters()

PID=Array Count(ANNEParamters())

ANNEParamters(PID)=Parameter

Neuron(index).PID=PID

Endfunction index

`Add_Charge(NID): Add a charge to the specified neuron: NID = Neuron ID.

Function Add_Charge(NID as DWord,Charge#)

If Not Valid_Neuron(NID) Then Exitfunction

Charge#=Charge#/Neuron(NID).Resistance

c#=Sigmoidal(Charge#,Neuron(NID).Curve)

Neuron(NID).Charge=Neuron(NID).Charge+c#

Endfunction

`Fire_Neuron(NID): Checks to see if Neuron is ready to fire (has enough charge). If the neuron fires,

` the linked neurons will receive the Output charge. If the neuron is a terminal neuron, the function will be activated.

` NID=Neuron ID

Function Fire_Neuron(NID)

If Not Valid_Neuron(NID) Then Exitfunction

If Neuron(NID).Charge>Neuron(NID).Threshold

Add_Charge(Neuron(NID).Link1,Neuron(NID).Output)

Add_Charge(Neuron(NID).Link2,Neuron(NID).Output)

Add_Charge(Neuron(NID).Link3,Neuron(NID).Output)

Add_Charge(Neuron(NID).Link4,Neuron(NID).Output)

If Neuron(NID).FunctionFlag=1

_Add_Function(0,Neuron(NID).FID,ANNEParamters(Neuron(NID).PID))

Endif

Neuron(NID).Charge=0

Endif

Endfunction

`Process_Neurons(): Cycles through all available neurons, processes the neuron firing and Dissipation.

Function Process_Neurons()

Initialize_ANNE()

If Not Valid_Neuron(0) Then Exitfunction

For i = 0 to Array Count(Neuron())

Fire_Neuron(i)

Neuron(i).Charge=Neuron(i).Charge-Neuron(i).Dissipation

Next i

Endfunction

`Valid_Neuron(NID): Returns a 1 if the Neuron ID (NID) is a valid Neuron. Otherwise a 0 is returned.

Function Valid_Neuron(NID as DWord)

Initialize_ANNE()

Flag=1

If Array Count(Neuron())<NID Then Flag=0

Endfunction Flag

`Get_DNA(seed): Returns a sequence of genes stored in DNA() array based on the provided seed value.

Function Get_DNA(seed)

Initialize_ANNE()

Randomize seed

Do

DNA(0).Curve=(rnd(600.0)/100.0)-3.0

If DNA(0).Curve<>0 Then Exit

Loop

DNA(0).Threshold=(rnd(999.0)/100.0)+.01

DNA(0).Resistance=1.0+(Rnd(100.0)/100.0)

Do

DNA(0).Output=(rnd(2000.0)/100.0)-10.0

If DNA(0).Output<>0 Then Exit

Loop

DNA(0).Dissipation=Rnd(100.0)/100.0

Randomize Timer()

Endfunction
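The important property of Get_DNA() is that the same seed always rebuilds the same set of genes, so only the seed number needs to be stored and scored. A Python sketch of the same idea, using the same value ranges as the function above. It uses its own random generator instead of reseeding the global one, which is a design choice of this sketch and not how the DBPro version works, and the dictionary layout is made up for the example.

```python
import random


def get_dna(seed):
    """Rebuild a gene set from a seed; same seed, same genes."""
    rng = random.Random(seed)      # private generator, global state untouched
    curve = 0.0
    while curve == 0.0:            # a curve of 0 would divide by zero later
        curve = rng.randint(0, 600) / 100.0 - 3.0
    threshold = rng.randint(0, 999) / 100.0 + 0.01
    resistance = 1.0 + rng.randint(0, 100) / 100.0
    output = 0.0
    while output == 0.0:           # an output of 0 would never pass anything on
        output = rng.randint(0, 2000) / 100.0 - 10.0
    dissipation = rng.randint(0, 100) / 100.0
    return {
        "curve": curve,
        "threshold": threshold,
        "resistance": resistance,
        "output": output,
        "dissipation": dissipation,
    }


print(get_dna(42) == get_dna(42))   # prints True: the seed fully determines the genes
```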

`Score_DNA(seed,score): Adds the Score to the given DNA 'seed'. Score can be positive or negative.

Function Score_DNA(seed,score as integer)

Initialize_ANNE()

if seed<0 or seed>10000 then exitfunction

if DNAScore(seed)+score>MaxDNAScore(0)

MaxDNAScore(0)=DNAScore(seed)+score

Endif

If DNAScore(seed)+score<0

DNAScore(seed)=0

Exitfunction

Endif

If DNAScore(seed)+score>%1111111111111111

DNAScore(seed)=%1111111111111111

Exitfunction

Endif

DNAScore(seed)=DNAScore(seed)+score

Endfunction
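The clamping in Score_DNA() keeps each score inside what a Word can hold (0 to 65535, taking the binary literal above as sixteen 1 bits). A compact Python sketch of the same idea follows. One difference to be aware of: this sketch updates the best score after clamping, whereas the function above updates MaxDNAScore before the range checks. The function name and argument order are invented for the example.

```python
WORD_MAX = 0xFFFF   # 65535, the largest value an unsigned 16-bit Word holds


def score_dna(scores, seed, delta, max_score):
    """Add delta (positive or negative) to a seed's score, clamped to a Word.

    Returns the updated best score seen so far.
    """
    if not 0 <= seed < len(scores):
        return max_score            # ignore seeds outside the valid range
    scores[seed] = max(0, min(WORD_MAX, scores[seed] + delta))
    return max(max_score, scores[seed])


scores = [0] * 10001
best = 0
best = score_dna(scores, 7, 250, best)     # reward seed 7
best = score_dna(scores, 7, -1000, best)   # punish it; score bottoms out at 0
print(scores[7], best)
```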

`Get_DNA_Seed(): Returns a semi-random seed value from the possible range of seeds. As scores for seeds are

` recorded, only the top-performing seeds are likely to be returned. On occasion, a random seed will be

` returned regardless of score.

Function Get_DNA_Seed()

If MaxDNAScore(0)<100

i=rnd(10000)

Exitfunction i

Endif

For i = 0 to 10000

test=(MaxDNAScore(0)+5)-DNAScore(i)

If Rnd(test)<2 Then exit

Next i

if i>10000 then i=Rnd(10000)

Endfunction i

`Sigmoidal(value#,curve#): This formula returns a value between 0 and 1 (exclusive) in an "S" shaped curve.

`The larger the curve# value, the more stretched out the curve. Negative curve# values may be used, inverting the "S" shaped output.

Function Sigmoidal(value#,curve#)

`the sigmoidal formula. Graph this on a spreadsheet to see how it works.

result#=1.0/(1.0+2.04541^-((value#)/(curve#*.1)))

`The result# is a value between >0 and <1. Curve is the number of standard deviations in a normal distribution.

Endfunction result#
